
Coding with open LLMs

How do you add support for open LLMs in gpt-engineer? The notes 👇 are also relevant for the general use of open LLMs in software development projects.

Backstory

On 2024-01-25 I decided to join the gpt-engineer developers' meeting. My plan was to blend in with the crowd, see how the project is positioned, and then pick a small issue and try to solve it. After all, this was the first open source project I had tried to contribute to. To my surprise, I was one of only 4 people in the meeting, so I got my task right away: add support for open source models in gpte. Right into the deep end 😅

I had bought a MacBook Pro M3 Max with 128 GB of RAM the day before and was eager to test my new 💰 toy. As always, the task involved a lot of thinking and far less coding.

Specs

Inference library

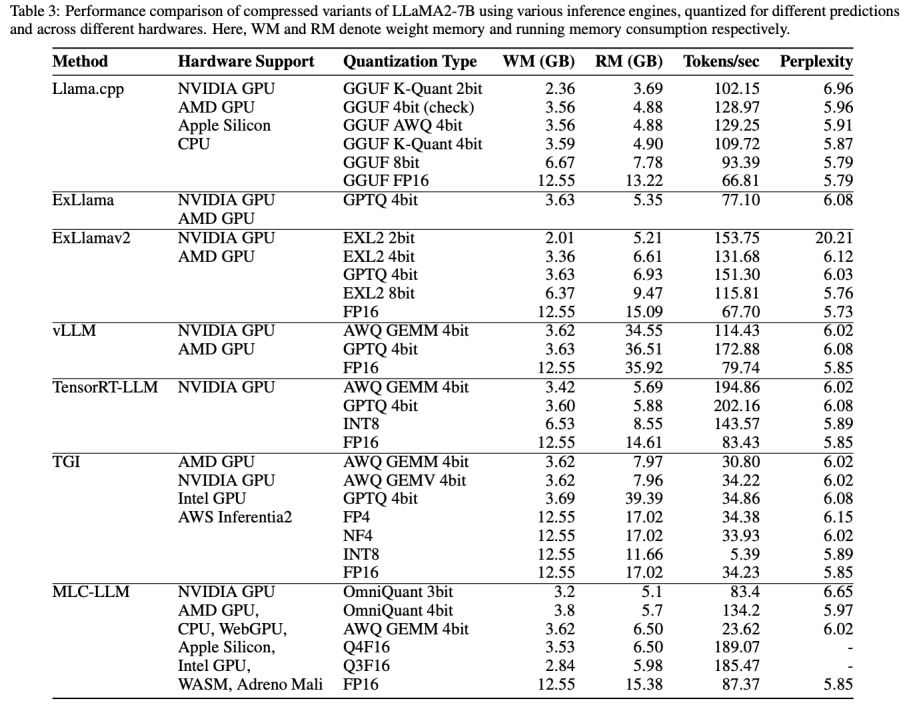

There are several options available:

- llama.cpp

- llama-cpp-py

- Ollama

- vLLM

- ExLlama

- MLC-LLM

llama.cpp and its Python bindings, llama-cpp-py, have the broadest hardware support. See:

Example
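A minimal sketch of talking to a locally served model, assuming llama-cpp-python's OpenAI-compatible server is running (for example started with `python -m llama_cpp.server --model <path-to-gguf>`) and listening on `http://localhost:8000`. The model name `codellama`, the system prompt and the helpers `build_chat_request` and `send` are hypothetical. It uses only the Python standard library and the OpenAI-style `/v1/chat/completions` request shape, so it should also work against other servers exposing the same API.

```python
import json
import urllib.request

# Assumed address of a local OpenAI-compatible server (hypothetical default).
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt: str, model: str = "codellama",
                       base_url: str = BASE_URL,
                       temperature: float = 0.1) -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat completions endpoint."""
    body = {
        "model": model,  # hypothetical model name; the server decides how it is used
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,  # low temperature for more deterministic code
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 120.0) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Write a Python function that reverses a string.")
    print(req.full_url)
    # Call send(req) only once a server is actually listening on BASE_URL.
```

Building the request separately from sending it keeps the payload easy to inspect before any network call is made.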

Choice of model

Model type:

- WizardCoder

- Mixtral

- CodeLlama
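With 128 GB of RAM, a first filter is whether a model's weights fit in memory at all. A rough sketch, assuming weight memory ≈ parameters × bits per weight ÷ 8 and approximate parameter counts of 34B for CodeLlama 34B and 46.7B for Mixtral 8x7B. It ignores the KV cache and runtime overhead, and real quantized formats use somewhat more than 4 bits per weight. The 75% headroom factor and the helper names are hypothetical.

```python
# Approximate parameter counts in billions (assumed figures).
MODELS = {
    "CodeLlama-34B": 34.0,
    "Mixtral-8x7B": 46.7,
}

BITS = {"fp16": 16, "8-bit": 8, "4-bit": 4}


def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 10**9 bytes)."""
    # params * bits / 8 bits per byte; the 10**9 factors cancel out.
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: float,
         ram_gb: float = 128.0, headroom: float = 0.75) -> bool:
    """Crude check: weights may use at most `headroom` of total RAM,
    leaving room for the OS, KV cache and activations (assumed rule of thumb)."""
    return weights_gb(params_billion, bits_per_weight) <= ram_gb * headroom


if __name__ == "__main__":
    for name, params in MODELS.items():
        for label, bits in BITS.items():
            size = weights_gb(params, bits)
            print(f"{name:15s} {label:6s} {size:6.1f} GB  fits={fits(params, bits)}")
```

For example, Mixtral 8x7B needs about 93.4 GB for fp16 weights but only about 23.4 GB at 4 bits, which is why quantized models are the usual choice on a laptop.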
